Written By Subhash Kak - December 3, 2019 7.58am EST

Will quantum computers ever reliably best classical computers? Amin Van/Shutterstock.com

Google announced this fall to much fanfare that it had demonstrated “quantum supremacy” – that is, it performed a specific quantum computation far faster than the best classical computers could achieve. IBM promptly critiqued the claim, saying that its own classical supercomputer could perform the computation at nearly the same speed with far greater fidelity and, therefore, the Google announcement should be taken “with a large dose of skepticism.”

This wasn’t the first time someone cast doubt on quantum computing. Last year, in an article in IEEE Spectrum, the flagship journal of electrical and computer engineering, Michel Dyakonov, a theoretical physicist at the University of Montpellier in France, offered a slew of technical reasons why practical quantum supercomputers will never be built.

So how can you make sense of what is going on?

As someone who has worked on quantum computing for many years, I believe that due to the inevitability of random errors in the hardware, useful quantum computers are unlikely to ever be built.

To understand why, you need to understand how quantum computers work since they’re fundamentally different from classical computers.

A classical computer uses 0s and 1s to store data. These numbers could be voltages on different points in a circuit. But a quantum computer works on quantum bits, also known as qubits. You can picture them as waves that are associated with amplitude and phase.

Qubits have special properties: They can exist in superposition, where they are both 0 and 1 at the same time, and they may be entangled so they share physical properties even though they may be separated by large distances. It’s a behavior that does not exist in the world of classical physics. The superposition vanishes when the experimenter interacts with the quantum state.

Due to superposition, a quantum computer with 100 qubits can represent 2^100 solutions simultaneously. For certain problems, this exponential parallelism can be harnessed to create a tremendous speed advantage. Some code-breaking problems could be solved exponentially faster on a quantum machine, for example.
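To get a feel for that exponential growth, here is a minimal Python sketch that counts how many amplitudes a classical simulation would have to track for a given number of qubits. The function names and the figure of 16 bytes per amplitude (one double-precision complex number) are illustrative assumptions, not something taken from any particular simulator.

```python
def state_vector_size(n_qubits: int) -> int:
    """Number of complex amplitudes needed to describe n qubits in general."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory a classical simulation would need to hold all amplitudes.

    bytes_per_amplitude=16 assumes one double-precision complex number
    per amplitude (an assumption made for illustration).
    """
    return state_vector_size(n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (10, 30, 54, 100):
        print(f"{n} qubits: {state_vector_size(n)} amplitudes, "
              f"{state_vector_bytes(n)} bytes")
```

Under these assumptions, 30 qubits already require 16 GiB of memory, and each additional qubit doubles the requirement.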

There is another, narrower approach to quantum computing called quantum annealing, where qubits are used to speed up optimization problems. D-Wave Systems, based in Canada, has built optimization systems that use qubits for this purpose, but critics also claim that these systems are no better than classical computers.

Regardless, companies and countries are investing massive amounts of money in quantum computing. China has developed a new quantum research facility worth US$10 billion, while the European Union has developed a €1 billion ($1.1 billion) quantum master plan. The United States’ National Quantum Initiative Act provides $1.2 billion to promote quantum information science over a five-year period.

Breaking encryption algorithms is a powerful motivating factor for many countries – if they could do it successfully, it would give them an enormous intelligence advantage. But these investments are also promoting fundamental research in physics.

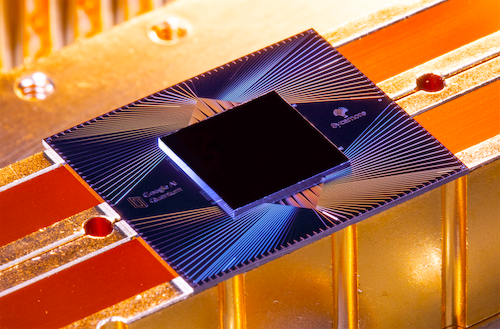

Many companies are pushing to build quantum computers, including Intel and Microsoft in addition to Google and IBM. These companies are trying to build hardware that replicates the circuit model of classical computers. However, current experimental systems have fewer than 100 qubits. To achieve useful computational performance, you probably need machines with hundreds of thousands of qubits.

Google’s Sycamore processor has only 54 qubits. Erik Lucero, Research Scientist and Lead Production Quantum Hardware, Google, CC BY-ND

The mathematics that underpins quantum algorithms is well established, but daunting engineering challenges remain.

For computers to function properly, they must correct all small random errors. In a quantum computer, such errors arise from non-ideal circuit elements and from the interaction of the qubits with the environment around them. For these reasons the qubits can lose coherence in a fraction of a second and, therefore, the computation must be completed in even less time. If random errors – which are inevitable in any physical system – are not corrected, the computer’s results will be worthless.

In classical computers, small noise is corrected by taking advantage of a concept known as thresholding. It works like the rounding of numbers. Thus, in the transmission of integers where it is known that the error is less than 0.5, if what is received is 3.45, the received value can be corrected to 3.
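The rounding idea can be sketched in a few lines of Python. This is a minimal illustration that assumes, as in the example above, that the transmitted value is an integer and the noise is always smaller than 0.5; the function name `threshold_decode` is hypothetical.

```python
import math


def threshold_decode(received: float) -> int:
    """Recover an integer from a noisy reading by snapping to the nearest integer.

    This only recovers the value that was sent if the noise magnitude is
    strictly below 0.5 (the assumption stated in the text).
    """
    return math.floor(received + 0.5)


if __name__ == "__main__":
    for reading in (3.45, 2.6, 7.02):
        print(reading, "->", threshold_decode(reading))
```

If the noise ever exceeds 0.5, the reading snaps to the wrong integer and the error goes unnoticed, which is why thresholding alone is not enough.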

Further errors can be corrected by introducing redundancy. Thus if 0 and 1 are transmitted as 000 and 111, then at most one bit-error during transmission can be corrected easily: A received 001 would be interpreted as 0, and a received 101 would be interpreted as 1.
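The three-bit scheme described here is the classical repetition code with majority-vote decoding. A minimal Python sketch follows; the function names `encode` and `decode` are chosen for illustration only.

```python
from typing import List


def encode(bit: int) -> List[int]:
    """Transmit each bit three times: 0 -> 000, 1 -> 111."""
    return [bit, bit, bit]


def decode(received: List[int]) -> int:
    """Majority vote: whichever value appears at least twice wins."""
    return 1 if sum(received) >= 2 else 0


if __name__ == "__main__":
    print(decode([0, 0, 1]))  # one flipped bit in 000
    print(decode([1, 0, 1]))  # one flipped bit in 111
```

The scheme tolerates one flipped bit per block. Two flips in the same block outvote the correct value and decode to the wrong bit.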

Quantum error correction codes are a generalization of the classical ones, but there are crucial differences. For one, the unknown qubits cannot be copied to incorporate redundancy as an error correction technique. Furthermore, errors present within the incoming data before the error-correction coding is introduced cannot be corrected.

While the problem of noise is a serious challenge in the implementation of quantum computers, it isn’t so in quantum cryptography, where people are dealing with single qubits, for single qubits can remain isolated from the environment for a significant amount of time. Using quantum cryptography, two users can exchange the very large numbers known as keys, which secure data, without anyone being able to break the key exchange system. Such key exchange could help secure communications between satellites and naval ships. But the actual encryption algorithm used after the key is exchanged remains classical, and therefore the encryption is theoretically no stronger than classical methods.

Quantum cryptography is being commercially used in a limited sense for high-value banking transactions. But because the two parties must be authenticated using classical protocols, and since a chain is only as strong as its weakest link, it’s not that different from existing systems. Banks are still using a classical-based authentication process, which itself could be used to exchange keys without loss of overall security.

Quantum cryptography technology must shift its focus to quantum transmission of information if it’s going to become significantly more secure than existing cryptography techniques.

Commercial-scale quantum computing challenges

While quantum cryptography holds some promise if the problems of quantum transmission can be solved, I doubt the same holds true for generalized quantum computing. Error-correction, which is fundamental to a multi-purpose computer, is such a significant challenge in quantum computers that I don’t believe they’ll ever be built at a commercial scale.

Article written by Subhash Kak, and posted on the theconversation.com site.

Article reposted on Markethive by Jeffrey Sloe as a followup to my article that may answer the question, "Can Google's New Quantum Computer Hack Bitcoin?".