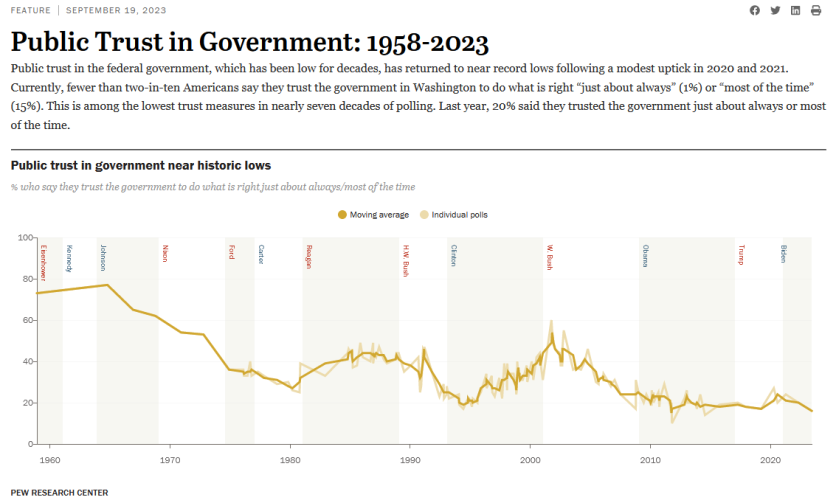

The erosion of confidence in governments globally has led to a surge in efforts to suppress dissenting voices and limit the dissemination of information deemed unfavorable by those in power. In other words, truths they don’t like. The recent Congressional Hearing on the Weaponization of the Federal Government has exposed the alarming lengths to which some governments are willing to go to silence opposition and maintain their grip on power.

The hearing has shed light on a disturbing trend: the integration of artificial intelligence (AI) into tools that will enable the government to suppress what it considers mis- or disinformation with unprecedented efficiency and effectiveness. Using AI for censorship has created a dangerous alliance between government agencies and powerful corporations, further undermining the principles of democracy and the ability to seek the truth and speak the truth.

Source: Truthstream Media X

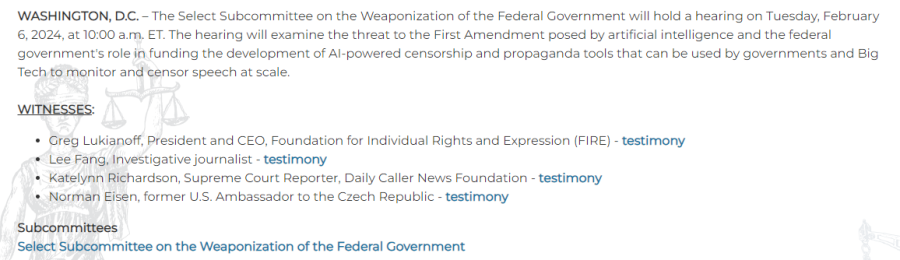

In early February 2024, a subcommittee hearing was held to discuss recent attempts by governments and agencies to censor online content, which pose a threat to the fundamental right to free speech. This summary highlights the key points and issues raised during the hearing and suggests ways to resist these efforts and safeguard your constitutional freedom of expression.

To begin, a brief overview is needed. A House subcommittee on Capitol Hill focuses specifically on examining how the government's powers are being used as weapons. This subcommittee was formed in January 2023 and has stirred up much debate, mainly because most of its discussions have centered on critiquing the current administration's use of governmental authority. While these discussions may seem biased, the substance of the dialogue holds value.

According to the Judiciary Committee's website, the hearing focused on the federal government's role in funding the development of high-powered censorship and propaganda tools that governments and big tech can use to monitor and censor speech at scale.

Source: Judiciary Committee

By all accounts, the hearing was convened in response to a recent exposé by The Daily Caller, which revealed that the National Science Foundation (NSF), an independent federal agency, had allocated $40 million towards developing online censorship tools. The report's author, Katelynn Richardson, suggests that this investment is part of a larger effort by the U.S. government to create a "censorship industrial complex" through the funding of AI research initiatives.

Governments around the world are following suit and implementing similar measures to curb online freedom. This article provides an overview of the various internet censorship laws enacted worldwide.

Katelynn was among the four individuals who provided testimony. The other three witnesses were Greg Lukianoff, CEO and President of the Foundation for Individual Rights and Expression (FIRE); Lee Fang, an investigative journalist known for his work on the Twitter Files, which exposed collaboration between big tech and governments to silence dissenting voices; and Norman Eisen, a former U.S. Ambassador to the Czech Republic.

It's worth noting that although the motivations behind this hearing were likely partisan, the information that came to light is crucial for everyone to be aware of, regardless of their political affiliations or geographical location.

These powerful instruments are not limited to any particular administration or government. It's essential to recognize that the same tools used against people you disapprove of today could be turned on you in the future. Holding a nonpartisan stance does not guarantee immunity, as history suggests such measures tend to escalate. Some might argue that we're already witnessing this.

Source: Judiciary.House.gov

The hearing commenced with opening statements from politicians of both the Republican and Democratic parties. Chairman Jim Jordan was the first to address the assembly, and he began by enumerating instances where the U.S. government had collaborated with major technology companies to suppress legitimate speech. His list was extensive and included several notable examples. Additionally, he drew attention to a recent investigative report revealing that the U.S. government had pressured Amazon to restrict specific book titles on its platform during the pandemic.

Jim expressed concern that censorship tactics are advancing alongside generative AI technology, which has gained widespread attention. He quoted from government-funded studies on censorship, highlighting how these tools could sidestep legal accountability by operating through automated programs rather than through individuals who could face legal repercussions.

The most incriminating statement, however, revealed that certain studies deliberately aim their internet censorship mechanisms at rural and indigenous communities, veterans, older adults, and military families, on the belief that these groups are the most susceptible to mis- and disinformation. It insinuates that these groups are too stupid to know the truth. Or perhaps these demographics tend to place their faith in entities beyond the government's control, which poses a challenge for those in power.

Jim then disclosed that another proposal document stated that reactive content moderation (usually performed by humans) is too slow and ineffective. The focus is therefore on tools that implement proactive censorship, meaning that online content could be censored before you even finish typing it. Notably, these are the types of concepts that have been extensively debated at the World Economic Forum's annual meetings.

Ranking Member Stacey Plaskett was the next speaker, and she rejected Jim's remarks as a conspiracy theory. She expressed frustration at having to sit through repeated discussions on the subject (six times, to be exact) and argued that the real weaponization of the government occurred during the prior administration.

Source: Video of hearing

The witnesses then had their chance to deliver opening statements, free from the influence of partisan agendas. Katelynn Richardson went first, expressing her concern about the U.S. government's potential involvement in creating a censorship-industrial complex. She then disclosed that many of the censorship studies she uncovered last year are still in progress.

Katelynn pointed out that a censorship-industrial complex is emerging as a new sector receiving substantial government funding, not unlike the expanding crypto compliance industry aimed at satisfying the Financial Action Task Force (FATF). She noted that when she challenged these institutions about their research, they responded by launching a marketing campaign to justify their censorship efforts as a means of safeguarding democracy. This excuse has become a common justification for government overreach in recent times.

Lee Fang, the second witness to testify, discussed the potential for AI to facilitate unparalleled levels of censorship. He drew on his personal experience working on the Twitter Files to illustrate how large pharmaceutical companies and their affiliated organizations have partnered with big tech to suppress online content.

Lee noted that although these programs were paused after the pandemic, they were reinstated around the middle of last year. He pointed out that various tools created by governments to combat terrorism are now being used against their own people. He cautioned that these tactics could be exploited by whoever is in power to silence political adversaries and emphasized the need for a nonpartisan approach to the committee's work.

Greg Lukianoff was the third witness to give testimony. He described how FIRE works nationwide to protect free speech rights from government interference, defending speakers on both the right and the left. He warned that the battles they have fought so far will seem insignificant compared to the challenges that lie ahead with the emergence of AI. Lukianoff also highlighted the issue of AI alignment, which in theory involves teaching AI to follow instructions but in practice often translates into teaching AI to comply with government dictates.

Greg emphasized that AI is merely a tool that can enhance freedom of speech, much like the internet. He drew an analogy between the internet, AI, and the printing press, which helped break religious authorities' hold over governments. However, Greg noted that this is only possible if AI can develop freely in a decentralized environment without excessive regulation. Over-regulation risks undesirable consequences such as censorship and may hinder the United States' technological competitiveness.

Norman Eisen then took his turn to speak, displaying a strong bias typical of such hearings by passionately advocating for government collaboration with major tech and AI companies. He dismissed his fellow panelists' warnings as fearmongering and asserted that it is permissible for the government to engage in such partnerships. Furthermore, he stressed that the government has the right to express its reservations to tech giants and to fund various AI initiatives. Eisen also announced his initiative to provide a scholarship for individuals interested in exploring the intersection of AI and democracy.

Thomas Massie [R] was the first to pose a question during the session. He directed his inquiry to Lee, who made a shocking revelation about the government's AI censorship initiatives: a program is in place that creates AI-powered social media bots designed to argue with individuals whom the government deems to be spreading false or misleading information.

In other words, if you have ever voiced an opinion on social media that contradicts the government's stance and subsequently received a barrage of responses from suspicious-looking accounts, these may well have been AI bots funded by the government.

Regrettably, the left-leaning politicians chose to overlook the AI censorship problem caused by the current government, dismissing it as a non-issue. Instead, they emphasized what they see as the bleak and harmful consequences for the United States if Donald Trump were to win the presidential election. This biased stance was unproductive and does not deserve attention here; however, you can find the complete hearing by following this link, and it is worth viewing. After my initial frustration, I found myself chuckling.

Kelly Armstrong [R] presented a comprehensive list of U.S. government grants for research on censorship and asked Katelynn about a specific grant aimed at training students for jobs related to disinformation. This initiative is an example of what Katelynn referred to as the censorship-industrial complex. She confirmed that the program was indeed a government-funded summer internship for students. Kelly then asked her what the curriculum taught students to recognize as misinformation.

Katelynn said the curriculum covered many things, but the primary emphasis was on election-related information. This is alarming given that more than 4.2 billion people worldwide live in countries holding elections this year. It appears that governments are determined to maintain the status quo by supporting established candidates. The question then arises: why have they not yet introduced a transparent blockchain voting system? The likely answer is not hard to figure out.

After some unrelated partisan commentary and a question about Trump directed at Norman Eisen by anti-crypto politician Stephen Lynch [D], Jim questioned Lee Fang about his opening statement, specifically regarding a 2012 hearing on government funding for censorship programs. Lee confirmed this and noted that the opposing political party had been pushing for online censorship at the time. Greg Lukianoff added that the tables have now turned and that no one should celebrate the use of these tools, even against people they dislike, because the same tools could later be used against them.

The queries continued with John Garamendi [D]. Like his political peers, he framed his inquiry in partisan terms. Nevertheless, he raised a noteworthy detail: conservative groups have devised a strategic plan called Project 2025 to reform the U.S. government. This is significant because it suggests that the growing opposition around the world may not be as spontaneous as it appears and may in fact be controlled.

In plain terms, various individuals and organizations claiming to challenge the current state of affairs may, in fact, be integral to the same dominant system they purport to oppose. A prime illustration of this phenomenon is that numerous purported opposition leaders who have risen to power in various nations are affiliated with the World Economic Forum (WEF). This article reveals how the WEF maintains programs designed to help its preferred candidates attain elected office around the world.

That said, it is improbable that Project 2025 has any connection to the WEF, considering the initiative is reportedly led by the Heritage Foundation, whose president gained widespread attention for criticizing the WEF at this year's Davos meeting.

Following some biased inquiries from Darrell Issa [R] and Stacey Plaskett [D], Greg Steube [R] asked Lee about NewsGuard, an organization that rates the credibility of news sources. Lee said he had devoted considerable effort to researching NewsGuard and described it as part of the expanding misinformation industry.

Lee found that NewsGuard has secured military contracts and is influencing how traditional media covers foreign policy. This is not surprising given the history of the CIA's Operation Mockingbird, a program used to manipulate US media outlets for propaganda purposes during the Cold War. Although Operation Mockingbird officially ended in the 1970s, many believe its practices continue in some form today.

Representatives Sylvia Garcia [D] and Dan Goldman [D] followed with a series of partisan questions, claiming there was no evidence of government coercion regarding social media censorship and reiterating that the committee was a useless waste of time and that “the true weaponization, the threat of weaponization of the federal government is Donald Trump and the Republicans, and we should move on from this charade.”

The chairman, Jim Jordan, interjected to point out that Dan Goldman had previously acknowledged in a related hearing that the U.S. government had been requesting big tech companies to remove certain content but that these companies had only complied with such requests 35% of the time.

Kat Cammack [R] reinforced Jim's message by stating that she has tangible evidence of censorship orchestrated by the current administration and its agencies. She also emphasized that the purpose of the hearing was not political posturing but confronting the growing threat of digital authoritarianism emanating from the US government. She stressed that this issue affects everyday citizens and warned that those who assume they are immune will be caught off guard.

Despite this, the hearing continued to be marred by partisan bickering, with Stacey Plaskett [D] leading the charge and Harriet Hageman [R] attempting to set the record straight. Harriet also highlighted that scaling up AI technology can supercharge censorship operations, and that the scope is astonishing. She cited one company's pitch to the NSF, which boasted that it was using AI to monitor 750,000 blogs and media articles daily as well as mining data from the major social media platforms.

Pro-crypto politician Warren Davidson [R] finally had his turn to speak. He brought up a topic the other speakers had avoided: the potential creation of a digital identification system by the US government. Warren then turned to Greg for data supporting the claim that censorship is increasing. Greg shared a surprising statistic: 2020 and 2021 recorded the highest number of college professors terminated in the United States since the 1930s.

Greg highlighted that professors across the political spectrum have been affected. He went on to argue that removing academics who refused to conform to prevailing norms during the pandemic has paved the way for a potentially dystopian future in artificial intelligence. Greg pointed out that the scholars who remain, including those now working on government-funded AI censorship technology, are the ones who complied with the imposed expectations.

Following some politically charged remarks by Jim, Russell Fry [R] made a striking observation. He pointed out that to secure government funding for censorship-related research, one must actively apply for it. This implies that grant recipients are, in effect, setting out from the start to expand censorship capabilities.

Russell asked Greg how the issue should be addressed, and Greg's response was illuminating and unexpected. Contrary to popular belief, Greg argued that relying on regulation would not be effective, as it would inevitably lead to further centralization and increase the risk of future control mechanisms. Instead, he argued, the key to resolving this problem lies in decentralization: developing and deploying decentralized AI systems that prevent any single entity from wielding such control.

Image by Markethive.com

The pressing question now is how to counter the growing trend of online censorship. The first step is to acknowledge the importance of presenting truthful information in a composed and well-reasoned manner, supported by logical arguments and factual evidence. By doing so, we can communicate our message effectively and foster a more informed public discourse.

Frequently, people try to communicate the truth using exaggerated language, insults, and other content that may be considered offensive, which can lead to censorship regardless of the message's intent. While some argue that individuals should be free to express themselves in such ways, the reality is that such language may not be tolerated in all cultures and societies. Some countries, like the US, place greater emphasis on freedom of speech, while other parts of the world have stricter guidelines about what can and cannot be said.

The second approach is to choose carefully which issues to address. There is no point in screaming about an issue into the void, as many people do on certain platforms. Likewise, arguing with government-backed AI bots is usually unproductive. If no one's perspective is likely to shift, it is better to stay out of the discussion. Instead, consider sharing your concerns with someone open to listening and empathizing. Communicating truths to people you know is more effective, as they may in turn pass the information on.

This leads to the third point: recognizing that the type of AI-based censorship many governments implement operates on specific social media platforms. Engaging with like-minded individuals in person, or online outside those platforms, makes you less likely to experience its effects. However, it is essential to avoid isolating yourself within an echo chamber.

It's crucial to recognize that avoiding the issue of censorship won't make it disappear. It can eventually impact you if left unaddressed, even if you try to ignore it. Unfortunately, it might not always be possible to fight back. Fortunately, though, you don't always need to.

This brings us to the fourth solution: using alternative platforms that are less vulnerable to imposed censorship. Although centralized platforms that uphold free speech still exist, they face increasing scrutiny from authorities and will likely have to comply with regulations. As a result, decentralized platforms have emerged as the most viable alternative, providing an unrestricted space for online interaction.

This article about the narratives driving the next crypto bull market illustrates that decentralized social media is becoming a prominent force, as authorities and bureaucrats will do anything and everything to dumb us down and use taxpayer dollars to do it. In effect, we are funding our own censorship, which affects not only efforts to reveal truths online but also the asking of questions whose answers don't fit their narrative. People may have to adopt decentralized media out of necessity.

This brings us to the final point: voting for leaders committed to protecting free speech, a fundamental right that supersedes all else. Despite some people's dismissive views, the significance of free speech cannot be overstated, which is why it is enshrined in the First Amendment of the United States Constitution.

Without free speech, it becomes difficult to seek the truth, and when you don't know the truth, it becomes challenging to live in accordance with reality, which is grounded in truth. When it becomes difficult to live in reality, society starts to collapse, and when society starts to collapse, everyone eventually loses, including those in positions of power.

In a nutshell, combating censorship involves sharing truthful information in a manner that resonates with people, exploring alternative channels when online avenues are restricted, and supporting political candidates who champion free speech to prevent such limitations from arising. For many individuals, 2024 presents a critical opportunity to exercise their democratic voice before this dystopian nightmare takes hold.

Thank God for Markethive!

Also published @ Substack.com, BeforeIt’sNews.com, and Steemit.com