A Memory Revolution poised to change FSI (and much of computing) forever.

The Internet of Things has been around for some years now, and despite its almost unlimited applications, its Achilles' heel is the limited life of the batteries needed to keep memory chips from losing all their data once the battery goes flat.

There are obviously two possible solutions:

This article focuses on the second option. It was written by James Reinders on 22nd Oct and focuses on the more technical aspects.

Financial services businesses thrive on being faster or better than their competition in what they do best, and computer systems are obviously instrumental in that. There is a new memory technology that is poised to change FSI, and much of computing, forever. And in the nick of time: DRAM scaling has been slowing to a crawl (processor clock scaling slowed more than a decade ago).

Ask any programmer if they would like more memory for their FSI application, and chances are they would say “yes.” Of course, they would worry that there is a catch. So far, the catch has been that bigger memories are either too expensive, consume too much power, or are too slow. More power means more heat, and every server room has its limits.

All of that is changing thanks to non-volatile, or persistent, memory. Leading the charge is Intel Optane DC Persistent Memory. Imagine being able to expand memory without the extreme power consumption associated with DRAM memories!

Will this really change FSI forever? I think so, and I will explain why.

As I wax nostalgic, I recall that the first computers I programmed had only non-volatile memory. It was called core memory, and it was common back then. The year, 1977. The system, DEC PDP-8/e. We never had to wait for the computer to boot, because the memory kept its contents even when the power was off. We only had to reload the operating system if some program overwrote memory it wasn’t supposed to change. They hadn’t perfected memory protection, but it did have a front panel! Those were the days! Oh…

Today, semiconductor technology has finally built a non-volatile memory that is price, density, and performance competitive with DRAM memory (which is the memory that almost all computers use).

It has taken some time to take on DRAM. SRAM (static random-access memory) has been around longer than DRAM. SRAM uses less power, and offers higher performance, than DRAM. However, like DRAM, SRAM loses all its contents when power is removed. It is also less dense and much more expensive than DRAM.

We have had flash memory for some time now as well. In addition to non-volatile memory on many devices, flash has made possible solid-state disk drives (SSDs) filled with flash memory. While non-volatile, and dense, it has not been fast enough to compete with DRAM. And, it has had limitations in how often data in flash could be changed before it wore out.

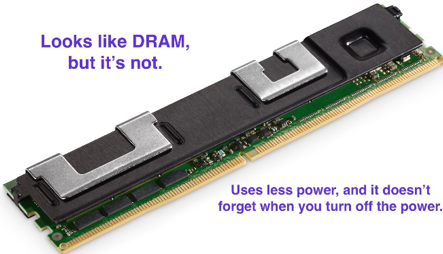

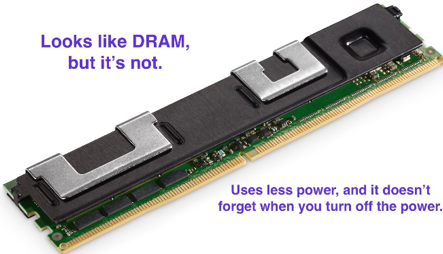

With Intel Optane DC Persistent Memory, there is now a memory technology that is lower power than DRAM, and can be used on the CPU’s main memory bus like main memory.

Dynamic Random-Access Memory (DRAM) has been around so long, many people have forgotten what the ‘Dynamic’ means. In a clever trick of engineering, memory cells are made very small but they do not remember data for very long. This is because the data stored in them fades away (the charge leaks away). A refresh is needed to charge them up again, before the memory fades. All modern DRAM needs to be refreshed multiple times per second for every memory location! Every bit in the entire memory system is read and written repeatedly even when the computer is doing nothing else!

As bad as this may sound, DRAM has been the preferred solution for main computer memory for decades. However, DRAM is now hitting a wall. Due to effects that are beyond the scope of this little article, the bigger DRAM chips get, the higher the percentage of power and time that needs to be spent (effectively wasted) on refreshes.

In other words, the scaling of DRAM is slowing now much like we saw processor clock rates slow starting a decade ago.
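To make the refresh cost concrete, here is a rough back-of-the-envelope sketch. The timing figures are illustrative assumptions, not numbers from this article or from any particular datasheet: a refresh command is assumed to be issued every 7.8 microseconds, and each refresh is assumed to keep the chip busy for longer as density grows. The fraction of time lost is simply the busy time divided by the interval.

```python
# Illustrative, assumed timing values (not taken from the article).
T_REFI_NS = 7800.0  # assumed average interval between refresh commands, in ns

# Hypothetical refresh-cycle times per chip density, in ns.
# Denser chips are assumed to need a longer refresh cycle.
ASSUMED_T_RFC_NS = {"4Gb": 260.0, "8Gb": 350.0, "16Gb": 550.0}


def refresh_overhead(t_rfc_ns, t_refi_ns=T_REFI_NS):
    """Fraction of time the DRAM is busy refreshing instead of serving requests."""
    return t_rfc_ns / t_refi_ns


overheads = {d: refresh_overhead(t) for d, t in ASSUMED_T_RFC_NS.items()}

for density, frac in overheads.items():
    print(f"{density}: {frac:.1%} of time spent on refresh")
```

Under these assumed numbers the share of time spent refreshing rises with chip density, which is the trend described above. Real figures depend on the memory generation, temperature, and vendor.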

Even if we have the power and money to burn, DRAM is not the only technology for the future.

Analytics, and many other applications, love in-memory data. The bigger the memory, the better. Any high-frequency or streaming data analysis can benefit from more memory because it can greatly reduce the latency experienced by the application. Lowering a critical time-to-answer can translate into less risk and more profit. In-memory databases, virtualization, and performance storage top the list in terms of “what will benefit from persistent memory the most,” and this can impact a wide range of FSI applications, including machine learning and risk analysis workloads.

One time series database used in FSI is kdb+. Recently published results showed more than 2X performance improvements (geomean; the “max” was higher) on the STAC-M3 Antuco and Kanaga benchmarks. These “tick database stacks” test both I/O (Antuco) and volume scaling (Kanaga).

With promising results like this, it is no surprise that many people are expecting more great things for their own workloads. A significant increase in memory size, whether persistent, volatile, or both, positively impacts the performance characteristics of applications and their design. Even without this new persistent memory, it is not uncommon to see compute grids adding large memory instances to enhance computational finance and machine learning use cases. It only stands to reason that the affordability of Intel Optane DC Persistent Memory will lead to more systems being built with much higher memory capacity. Significantly increasing memory size allows for much more data to remain in memory at once, such as holding a quarter or two of historic data instead of a single month of data. With more data in memory, compute capacity can grow much faster than the footprint of servers, which can ease both space and power consumption growth, and that is music to the ears of anyone with a large server room already! Developers using the Persistent Memory Developer Kit (PMDK) are helped in using persistent memory for logging, transaction capture, and rehydration of static data.
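As a rough illustration of the programming model behind "rehydration," the sketch below keeps a counter in a memory-mapped region that survives the region being closed and reopened. This is not PMDK and does not use its API: it relies only on Python's standard `mmap` module and an ordinary temporary file, and the names `open_region` and `bump_counter` are hypothetical helpers invented for this example. On a system with persistent memory, the mapped file would typically live on a persistent-memory-aware file system instead.

```python
import mmap
import os
import struct
import tempfile

RECORD = struct.Struct("<Q")  # one 8-byte unsigned counter at offset 0


def open_region(path, size=4096):
    """Map a file into memory, creating it zero-filled if needed (hypothetical helper)."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)
        return mmap.mmap(fd, size)
    finally:
        os.close(fd)  # the mapping keeps its own handle to the file


def bump_counter(path):
    """Load the stored counter, increment it in place, and flush it back."""
    region = open_region(path)
    try:
        (count,) = RECORD.unpack_from(region, 0)
        RECORD.pack_into(region, 0, count + 1)
        region.flush()  # push the update to the backing store
        return count + 1
    finally:
        region.close()


path = os.path.join(tempfile.mkdtemp(), "counter.bin")
first = bump_counter(path)   # region is created, counter starts from zero
second = bump_counter(path)  # region is reopened, earlier value is still there
print(first, second)
```

The point of the sketch is that the program updates data with ordinary loads and stores into a mapped region, with an explicit flush marking when the update should be durable, rather than serializing records through read and write calls.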

Intel Optane DC Persistent Memory fits in DIMM slots and uses the memory bus for communication with CPUs, giving it an equal footing with DRAM when connecting to the CPU. An SSD, using the same 3D XPoint memory, has to use the PCIe bus for communication with CPUs. That is slower and lower bandwidth than the memory bus. The latency difference is two to three orders of magnitude (100–1000X), and the bandwidth difference is more than 3X (see the above graph). Unsurprisingly, this allows for significant differences in application performance.

Intel Optane memory comes in the familiar DIMM format, and plugs into the same DIMM socket that DRAM uses.

It does require that the hardware and software understand it — something that the latest systems do offer!

Photo: Courtesy of Intel

DRAM and persistent memory are two memory technologies that serve slightly different purposes in the memory hierarchy of a system today. For FSI, it seems likely that future orders will want some of both memory technologies in their systems. For now, Intel Optane memory complements DRAM, rather than replacing it entirely. As the benchmarking of kdb+ demonstrates, computers with both Intel Optane memory and DRAM can access programs and data faster, providing additional performance and responsiveness.

I’ve heard it summed up as “much of the computing world changes if you can assume that memory is persistent.” I think you’d have to agree. With DRAM scaling slowing, and the associated rise in power being wasted if we push for more DRAM, it is obvious we need a better way forward. Thankfully, with Intel Optane DC Persistent Memory there is an answer. If you want to read more, Intel has a website with a great deal more information for developers considering using persistent memory, including a version of the Jeopardy game to test your knowledge.

Yes, computer architecture is once again changing forever. Thankfully, we can add non-volatile memory to our systems without anyone having to wrap little wires around, and through, little ferrite donuts.